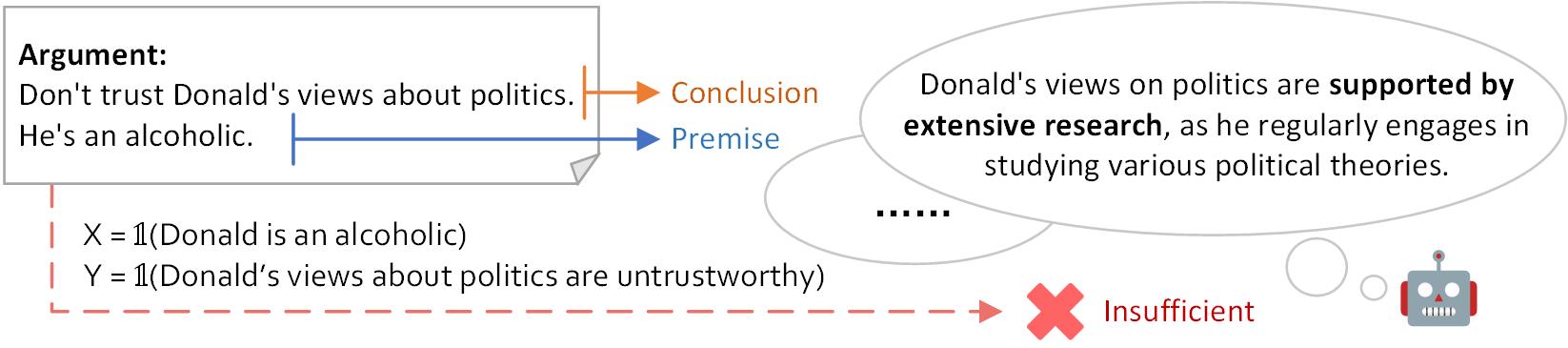

Argument Sufficiency Assessment is the task of determining whether the premises of an argument sufficiently support its conclusion. Previous works train classifiers on human annotations. However, the sufficiency criteria are vague, and judgments vary across annotators.

An example of the argument sufficiency assessment task

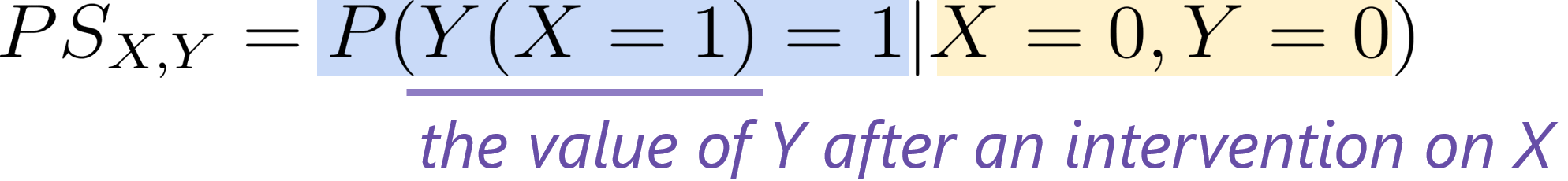

To tackle this problem, we propose CASA, a zero-shot Causality-driven Argument Sufficiency Assessment framework that formulates the task with the Probability of Sufficiency (PS), a concept borrowed from causality:

PS quantifies the probability that introducing X would produce Y in the case where X and Y are in fact absent.
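In Pearl's counterfactual notation, where x′ and y′ denote the absence of X and Y and Y_x is the value Y would take had X been set to x, this definition is commonly written as shown below. For an argument, X plays the role of the Premise and Y the role of the Conclusion.

```latex
\mathrm{PS} = P\left(Y_{x} = y \;\middle|\; X = x',\, Y = y'\right) = P\left(y_x \mid x', y'\right)
```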

Measuring the PS of a given argument presents the following challenges:

CASA Framework

An example of the detailed reasoning process of CASA (LLAMA2) on BIG-bench-LFD.

An example of the detailed reasoning process of CASA (TULU) on Climate.

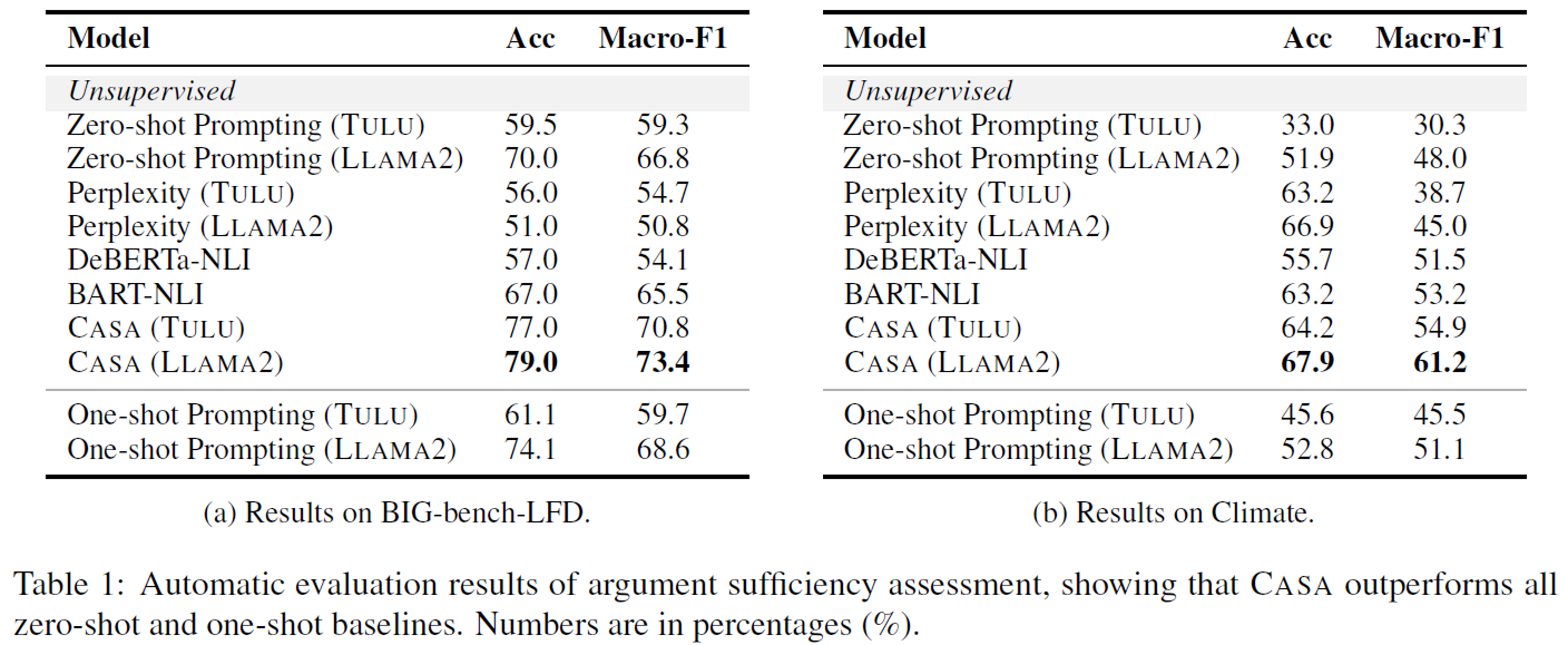

We first compare CASA with baseline methods on two logical fallacy detection datasets, BIG-bench-LFD and Climate. CASA significantly outperforms all the corresponding zero-shot baselines (significance level α = 0.02) and also surpasses the one-shot baselines.
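The significance test behind the α = 0.02 result is not named here. As an illustration only, one standard way to compare two systems evaluated on the same examples is a paired approximate randomization (permutation) test. The sketch below assumes per-example correctness is available for both systems; the function name `paired_randomization_test` is hypothetical.

```python
import random
from typing import Sequence


def paired_randomization_test(
    correct_a: Sequence[bool],
    correct_b: Sequence[bool],
    n_rounds: int = 10_000,
    seed: int = 0,
) -> float:
    """Two-sided p-value for the accuracy difference between systems A and B.

    Element i of each sequence marks whether that system got example i right,
    so the two sequences must be aligned item by item.
    """
    if len(correct_a) != len(correct_b) or not correct_a:
        raise ValueError("need two non-empty, aligned sequences")
    rng = random.Random(seed)
    observed = abs(sum(correct_a) - sum(correct_b))
    at_least_as_extreme = 0
    for _ in range(n_rounds):
        diff = 0
        for a, b in zip(correct_a, correct_b):
            # Under the null hypothesis the labels "A" and "B" are exchangeable,
            # so each pair of outputs is swapped with probability 1/2.
            if rng.random() < 0.5:
                a, b = b, a
            diff += int(a) - int(b)
        if abs(diff) >= observed:
            at_least_as_extreme += 1
    # Add-one smoothing keeps the estimated p-value strictly positive.
    return (at_least_as_extreme + 1) / (n_rounds + 1)
```

A difference would be called significant at α = 0.02 when the returned p-value is below 0.02. A paired test fits this setting because both systems are scored on the same argument instances.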

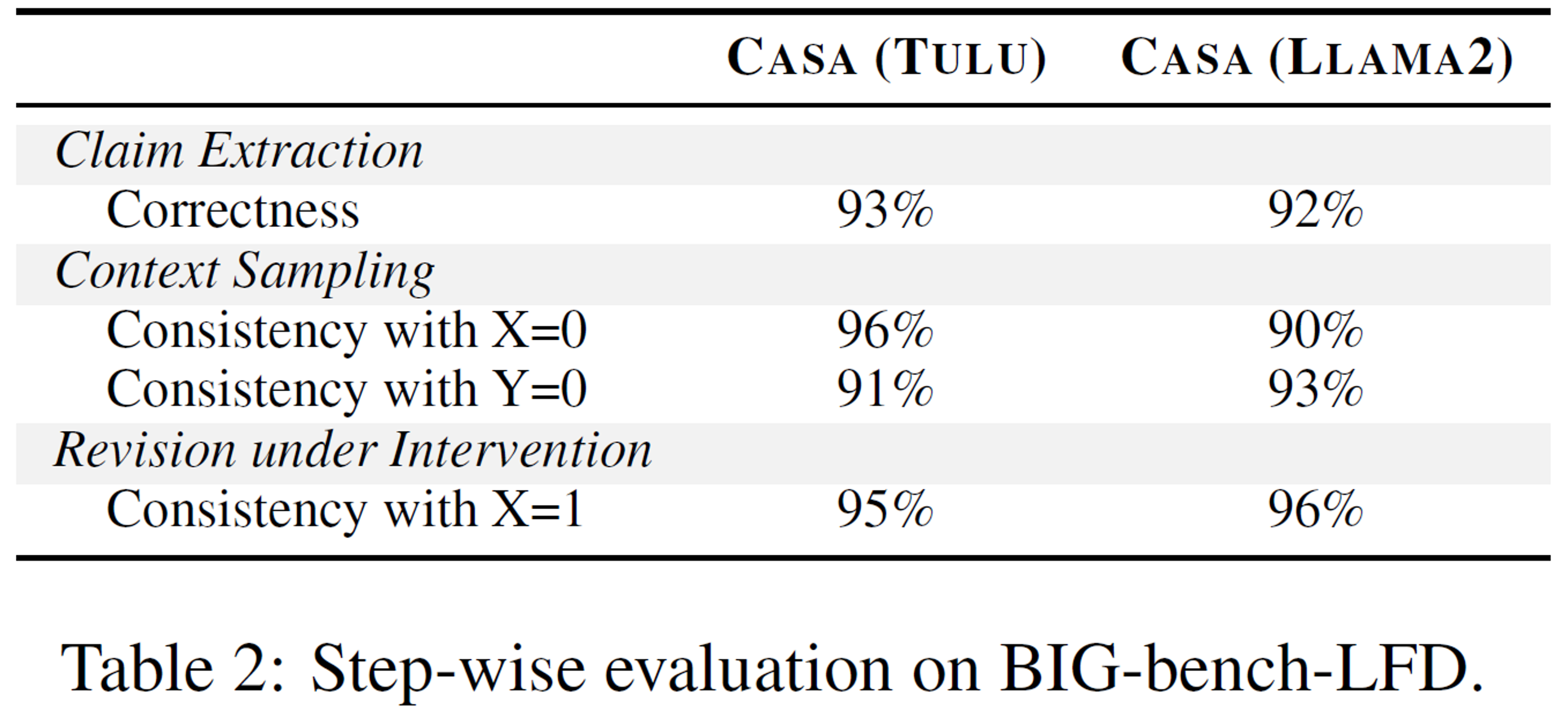

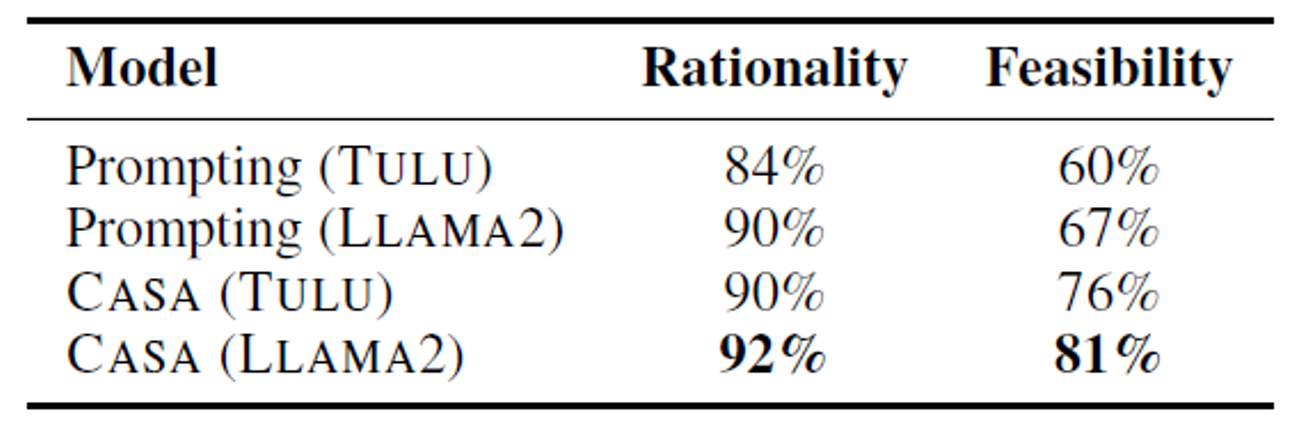

To examine whether LLMs work as we expect in each step of CASA, we conduct step-wise human evaluation. We ask human annotators to rate three aspects individually: 1) In the claim extraction step, do LLMs extract the correct premises and conclusion from the argument? 2) In the context sampling step, are the contexts generated by LLMs consistent with ¬Premise and ¬Conclusion? 3) In the revision step, are the revised situations consistent with the Premise?

The accuracy of all aspects is above 90%, showing that LLMs can generate textual data that conform to given conditions and can make interventions on situations expressed in natural language.
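The three steps rated above (claim extraction, context sampling under ¬Premise and ¬Conclusion, and revision to satisfy the Premise) can be chained into a sampling-based estimate of PS. The sketch below assumes a final check of whether the Conclusion holds in each revised situation and takes PS as the share of revised situations where it does. `ReasoningBackend` and all of its method names are hypothetical stand-ins for LLM prompts, not the authors' implementation.

```python
from dataclasses import dataclass
from typing import List, Protocol, Tuple


class ReasoningBackend(Protocol):
    """Hypothetical interface to an LLM; every method name is illustrative."""

    def extract_claims(self, argument: str) -> Tuple[str, str]:
        """Step 1: return (premise, conclusion) extracted from the argument."""
        ...

    def sample_contexts(self, premise: str, conclusion: str, n: int) -> List[str]:
        """Step 2: return n situations where neither premise nor conclusion holds."""
        ...

    def revise(self, context: str, premise: str) -> str:
        """Step 3: edit the situation so that the premise holds (the intervention)."""
        ...

    def conclusion_holds(self, situation: str, conclusion: str) -> bool:
        """Assumed final check: does the conclusion hold in this situation?"""
        ...


@dataclass
class PSEstimate:
    premise: str
    conclusion: str
    revised: List[str]
    supports: List[bool]

    @property
    def ps(self) -> float:
        # Monte Carlo estimate: share of intervened situations in which Y holds.
        return sum(self.supports) / len(self.supports)


def estimate_ps(backend: ReasoningBackend, argument: str, n: int = 5) -> PSEstimate:
    premise, conclusion = backend.extract_claims(argument)
    contexts = backend.sample_contexts(premise, conclusion, n)
    if not contexts:
        raise ValueError("no contexts sampled")
    revised = [backend.revise(c, premise) for c in contexts]
    supports = [backend.conclusion_holds(r, conclusion) for r in revised]
    return PSEstimate(premise, conclusion, revised, supports)
```

Returning the revised situations together with their judgments, rather than only the score, keeps the intermediate reasoning available for inspection or for explaining why an argument was judged insufficient.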

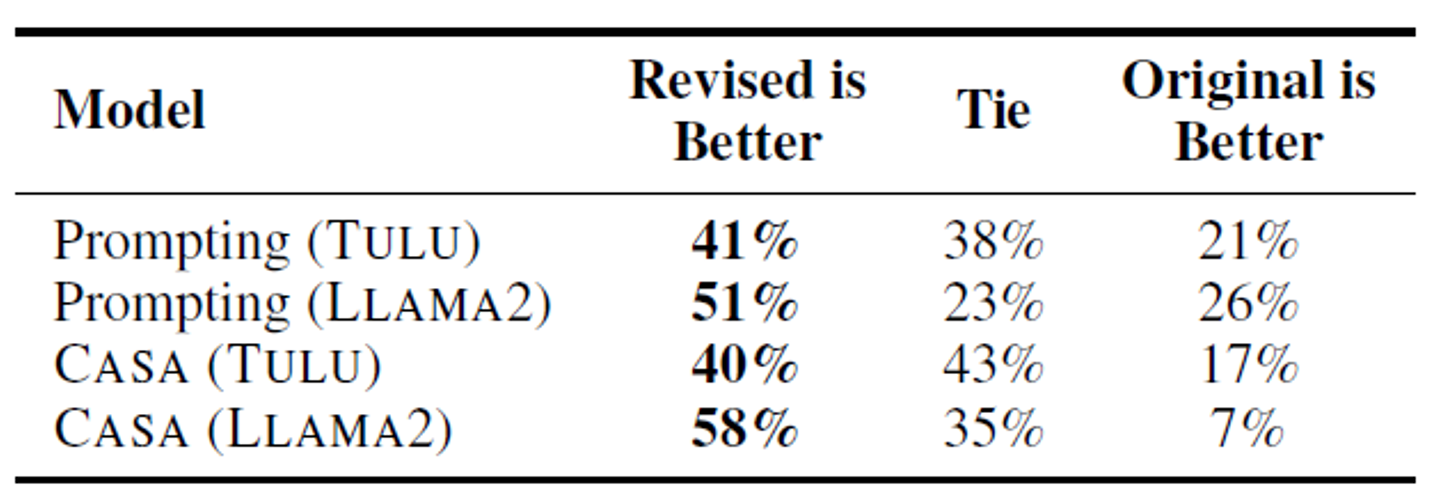

We apply CASA to a realistic scenario: providing writing suggestions for essays written by students. If CASA identifies that an argument in an essay is insufficient, we extract explainable reasons from CASA’s reasoning process, and provide them as suggestions for revision.

Specifically, we generate objection situations (situations that challenge the sufficiency of the argument) from the intervened situations R that contradict the Conclusion, by removing the Premise from R.
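A minimal sketch of this filtering step follows. It assumes the intervened situations are available as strings, each paired with a judgment of whether the Conclusion holds in it. It approximates "removing the Premise" by deleting the premise's literal text, whereas an LLM-based edit would also handle paraphrases. The function name `objection_situations` is hypothetical.

```python
from typing import List, Sequence


def objection_situations(
    revised: Sequence[str],
    supports: Sequence[bool],
    premise: str,
) -> List[str]:
    """Keep situations R that contradict the conclusion, with the premise removed.

    supports[i] says whether the conclusion holds in revised[i].
    Premise removal is a literal string deletion (an assumption of this sketch).
    """
    if len(revised) != len(supports):
        raise ValueError("revised and supports must be aligned")
    objections = []
    for situation, holds in zip(revised, supports):
        if holds:
            continue  # the conclusion follows here, so this is no objection
        stripped = situation.replace(premise, "")
        # Collapse whitespace and drop separators left at the start by the deletion.
        stripped = " ".join(stripped.split()).lstrip(" ;,")
        if stripped:
            objections.append(stripped)
    return objections
```

For example, with the premise "It rained", the contradicting situation "It rained; a tarp covered the lawn." becomes the objection "a tarp covered the lawn.", which names a concrete circumstance the argument fails to rule out.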

Question 1: Is CASA capable of generating rational and feasible objection situations to the essays?

Compared with directly prompting the base model, CASA generates objection situations that are more rational and feasible. The gap is larger in feasibility, because LLMs tend to produce abstract objections when prompted directly, while CASA provides more practical objections that are easier to address.

Question 2: Will revising based on the generated objection situations improve the sufficiency of the essays?

For both methods tested, the Revised is Better proportion exceeds the Original is Better proportion, indicating that revision improves the sufficiency of the writing. Moreover, with the same base model, CASA obtains a higher Revised - Original ratio (the Revised is Better proportion minus the Original is Better proportion) than the prompting method. This suggests that, even without considering the difficulty of revision, CASA helps more in the revision process.
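The Revised - Original ratio is simple arithmetic over pairwise judgments. The sketch below assumes each judgment is one of three hypothetical labels, "revised", "original", or "tie". Ties count toward the denominator but toward neither proportion, which is an assumption about how the proportions are normalized.

```python
from collections import Counter
from typing import Iterable


def revised_minus_original(judgments: Iterable[str]) -> float:
    """Revised-is-Better proportion minus Original-is-Better proportion.

    Labels are "revised", "original", or "tie" (hypothetical names).
    """
    counts = Counter(judgments)
    unknown = set(counts) - {"revised", "original", "tie"}
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no judgments")
    return (counts["revised"] - counts["original"]) / total
```

For instance, 6 "revised", 2 "original", and 2 "tie" judgments give (6 - 2) / 10 = 0.4. A positive value means revisions are preferred more often than the originals.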

@article{liu2024casa,
  title={CASA: Causality-driven Argument Sufficiency Assessment},
  author={Liu, Xiao and Feng, Yansong and Chang, Kai-Wei},
  journal={arXiv preprint arXiv:2401.05249},
  year={2024}
}